In the popular imagination, quantum physicists are probably best known for the inability to tell a living cat from a dead one. Thus, they might not be the first port of call for someone trying to sort out the tangle of cause-and-effect and confounding variables in, say, a massive drug trial.

That, it turns out, would be an unfortunate mistake because a trio of quantum foundations researchers at Perimeter Institute have made a substantial new contribution to the study of causation.

In two papers – the first published in the Journal of Causal Inference, the second currently on the arXiv – they introduce a new technique that helps unravel part of that causal complexity and promises to become even more powerful as computing capabilities increase.

Perimeter Faculty member Robert Spekkens and postdoctoral fellows Tobias Fritz and Elie Wolfe have used approaches inspired by fundamental quantum physics to create a new tool that helps identify, for given correlations, which causal accounts of the correlations are viable and which are not.

Wolfe and Miguel Navascués, from the Institute for Quantum Optics and Quantum Information at the Austrian Academy of Sciences, then showed that the technique can, in principle, generate all the constraints on correlations that are implied by a given causal structure (with an important caveat: the higher the accuracy, the greater the computational cost).

If this seems like unusual territory for quantum researchers, that’s because it is. The field of “causal inference” (essentially, the science of cause and effect) has traditionally been pursued by philosophers, statisticians, and computer scientists, as well as by researchers in fields such as epidemiology, economics, and health science, where questions about causal mechanisms are of central importance. These days, it is perhaps best characterized as a branch of machine learning – indeed, Turing Award winner and artificial intelligence (AI) pioneer Judea Pearl has emphasized that for AI to replicate the way a person thinks, it will have to encompass an understanding of cause and effect.

Quantum physicists are a relatively new addition to the mix of disciplines working on the problem, but the work coming out of Perimeter shows that they can make an outsized contribution, with potential impact across the spectrum of fields in which these tools are applied.

Causal structures

Figuring out what causes what is not as easy as it sounds. When two observable quantities are found to be correlated, it can be tempting to imagine that one determines the other, but such a conclusion may be mistaken – it may be that the source of the correlation lies deeper, in a common cause that is unseen and that determines both.

This is partly because, when talking about causality, one enters a realm of nearly endless probabilities. Take health data as an example. Medical researchers might test a cancer drug and find high rates of recovery for women over the age of 60. Is that because the drug works, or because women over 60 have a higher likelihood of spontaneous remission? Without careful analysis – and sometimes even with it – the data can’t tell you which connection is the right one.
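To make the common-cause pitfall concrete, here is a minimal Python sketch. It is an illustration only, not something taken from the researchers' papers: the variable names and the probabilities (0.9 and 0.1) are assumptions chosen for clarity. A hidden factor drives two observed outcomes that never influence each other, yet the two come out strongly correlated until the hidden factor is held fixed.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def simulate(n=20000, seed=0):
    """Draw n samples of (hidden, a, b); a and b depend only on hidden."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        hidden = rng.random() < 0.5          # unobserved common cause
        p = 0.9 if hidden else 0.1           # assumed effect of the hidden factor
        a = int(rng.random() < p)            # first observed variable
        b = int(rng.random() < p)            # second observed variable, independent of a given hidden
        rows.append((hidden, a, b))
    return rows

rows = simulate()

# Correlation seen by an analyst who cannot observe the hidden factor.
overall = pearson([a for _, a, _ in rows], [b for _, _, b in rows])

# Correlation once the hidden factor is held fixed (here: hidden is True).
within = pearson([a for h, a, _ in rows if h], [b for h, _, b in rows if h])

print(f"overall correlation: {overall:.2f}")
print(f"correlation with hidden factor fixed: {within:.2f}")
```

For these assumed probabilities the exact overall correlation is 0.64, so the simulated value should sit close to that, while the correlation within a fixed hidden group should be close to zero.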

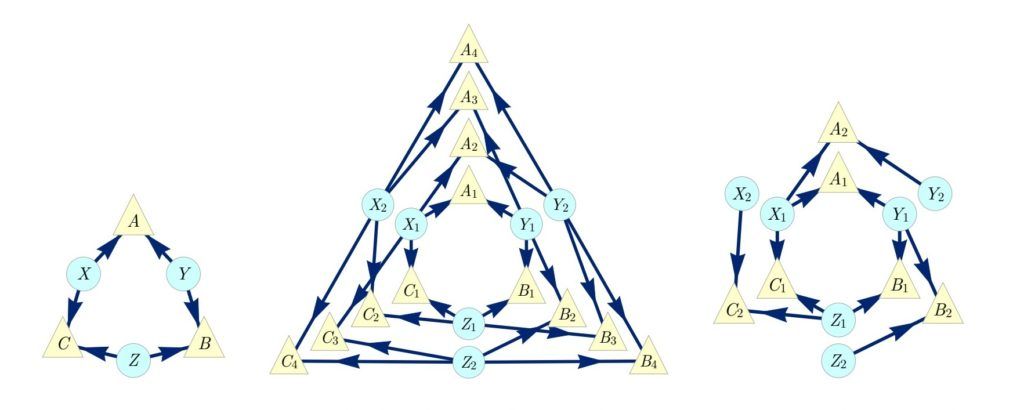

To wade through this thicket of possible causal alternatives, researchers use causal diagrams – a visual formalism that represents each set of possible causal relationships (called a causal structure) as a graph.

The nodes in these graphs can depict two different kinds of variables: those that are observed and those that are unobserved (i.e., hidden). An arrow connecting a pair of nodes indicates a direct cause-effect relationship between the corresponding variables.

Graphs that include one or more hidden variables are the most challenging to analyze, but they are the ones that are expected to be of most practical interest – whether the system in question is a biological organism, the world economy, or human behaviour, there are typically unobserved factors that are causally relevant.
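A causal structure of this kind can be written down in a few lines of code. The sketch below is a hypothetical encoding, not a format used by the researchers: each node is mapped to its direct causes, and a separate set marks which nodes are hidden. The node names (H for a hidden factor, T for treatment, R for recovery) are assumptions loosely modelled on the drug-trial example.

```python
# Each node maps to the tuple of its direct causes (an arrow parent -> node).
# Hypothetical structure: a hidden factor H influences both treatment T and
# recovery R, and T may also influence R directly.
PARENTS = {
    "H": (),
    "T": ("H",),
    "R": ("H", "T"),
}
HIDDEN = {"H"}  # nodes that are not observed

def roots(parents):
    """Nodes with no incoming arrows."""
    return sorted(node for node, ps in parents.items() if not ps)

def ancestors(parents, node):
    """All direct and indirect causes of a node."""
    seen = set()
    stack = list(parents[node])
    while stack:
        p = stack.pop()
        if p not in seen:
            seen.add(p)
            stack.extend(parents[p])
    return seen

def observed(parents, hidden):
    """Nodes whose values appear in the data."""
    return sorted(set(parents) - hidden)

print("roots:", roots(PARENTS))
print("observed:", observed(PARENTS, HIDDEN))
print("ancestors of R:", sorted(ancestors(PARENTS, "R")))
```

Storing parents rather than children keeps the "causal ancestry" of each node easy to look up, which is the property the inflation technique later relies on.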

In their paper, “The Inflation Technique for Causal Inference with Latent Variables,” Wolfe, Spekkens, and Fritz propose a new technique for solving the version of the problem with hidden variables.

“Hidden variable” is a term that is also bandied around by quantum physicists. That’s no accident. Indeed, the story of the inflation technique begins with a seminal result in the foundations of quantum theory.

Bell inequalities – and beyond

In the 1960s, physicist John Stewart Bell realized that there was a limit to how correlated the measured properties of two particles could be in a classical world (i.e., a world governed by the laws of classical physics rather than those of quantum mechanics).

If, for example, the particles are separated by a great distance and the measurement on the first particle is synchronized with the measurement on the second, then even a signal travelling at the maximum possible speed (that of light) would be too slow to inform one particle about which measurement was implemented on the other. Assuming the speed of light is a limit on the speed of causal influences, the choice of what to measure on one particle cannot possibly have a causal influence on the outcome of the measurement on the other particle, so any correlations must be due to a common cause. Bell then showed that there was a limit to the strength of correlations that could be obtained from a common cause.

He formalized this limit in terms of “Bell inequalities.” He also showed that quantum theory predicted that one could violate these inequalities. Therefore, if an experimentalist observes correlations that violate the Bell inequalities, they can conclude that there is no classical causal explanation of these correlations and that quantum theory is necessary to explain the experimental results. In other words, something intrinsically quantum is happening.
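The best-known example of a Bell inequality is the CHSH inequality, which the article does not name but which illustrates the idea. With two measurement settings per particle and outcomes of +1 or -1, any common-cause explanation must satisfy |E(0,0) + E(0,1) + E(1,0) - E(1,1)| ≤ 2, where E(x, y) is the average product of the two outcomes for settings x and y. The Python sketch below checks the classical bound by brute force and evaluates the quantum prediction for a standard textbook choice of measurement angles; the function names are assumptions made for this illustration.

```python
import math
from itertools import product

def chsh(E):
    """CHSH combination for a correlation function E(x, y), with x, y in {0, 1}."""
    return E(0, 0) + E(0, 1) + E(1, 0) - E(1, 1)

def classical_max():
    """Largest |CHSH| over all deterministic common-cause strategies.

    Each strategy fixes an outcome (+1 or -1) for every setting in advance.
    Any common-cause model is a mixture of these, so it cannot exceed this value.
    """
    best = 0
    for a0, a1, b0, b1 in product((-1, 1), repeat=4):
        a, b = (a0, a1), (b0, b1)
        best = max(best, abs(chsh(lambda x, y: a[x] * b[y])))
    return best

def quantum_value():
    """|CHSH| predicted by quantum theory for a singlet state.

    For spin measurements along directions at angles s and t, the singlet
    correlation is E = -cos(s - t). The angles below are a standard choice.
    """
    alice = (0.0, math.pi / 2)
    bob = (math.pi / 4, -math.pi / 4)
    return abs(chsh(lambda x, y: -math.cos(alice[x] - bob[y])))

print("classical bound:", classical_max())
print("quantum value:  ", round(quantum_value(), 4))
```

The brute-force search returns 2, while the quantum value is 2√2 (about 2.83), which is the kind of violation an experimentalist looks for.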

Bell’s theorem has been a cornerstone of quantum foundations for 50 years. In 2015, Spekkens and his student Chris Wood published an influential result: they showed that Bell inequalities can be used to pinpoint when classical ideas of cause and effect break down and quantum ones take over. This in turn helped launch causal inference as an active area in quantum foundations research. And thus did the trio of Perimeter researchers come to be working on causal inference.

Data scientists also want to find inequalities that characterize which correlations are consistent with a given causal structure, but for different reasons. When an epidemiologist or an economist makes a hypothesis about causal structure, it is necessarily conjectural. Certainly, it cannot be justified by an appeal to the finite speed of light, as happens in Bell-type experiments. Moreover, their systems cannot possibly be quantum. So, when their statistical data violates such an inequality, they can only draw one conclusion: their initial hypothesis about the causal structure must be mistaken and needs revision.

Given the variety of causal hypotheses pertinent to real-world problems, researchers would like to be able to determine the constraints on correlations for any given causal structure, and they would like to identify all such constraints. Finding an algorithm that can do so is a longstanding open problem of great importance. The inflation technique provides a solution. Using Wolfe and Navascués’ formal hierarchy, it is possible to discover every constraint implied by a causal structure up to any desired accuracy.

Two worlds meet

Spekkens, Wolfe, and Fritz made a point of submitting their paper to a journal of the causal inference community, rather than a physics journal.

As Spekkens explains: “There’s a danger that, if the communities are too disconnected, physicists could be reinventing the wheel without knowing that they’re doing so. If you can send your work to the Journal of Causal Inference and they tell you that it is new, you can be confident that you’re moving the field forward.”

In foundational quantum physics, the utility of the inflation technique was appreciated immediately. Physicist Nicolas Gisin of the University of Geneva, whose work spans quantum theory and experiment, said, “The inflation technique is a very new and promising tool. I expect it to become a standard tool in my field.” Still, Gisin cautioned that these are early days. “One needs to wait a bit and see how efficiently inflation can be programmed and how widely it can be applied.”

For their part, causal inference experts are enthusiastic. When Wolfe presented the work at the 7th Causal Inference Workshop at Uncertainty in Artificial Intelligence 2018, a key conference in the field, it elicited excitement – at the end of his talk, he was approached by more than a dozen members of the audience with questions about the new technique.

For computer scientist Ilya Shpitser, of Johns Hopkins University, a leading researcher in causal inference, the work constitutes a new and unusual way of thinking about a central problem in his field: “It appears as if the inflation technique is going to shed much light on this question or perhaps even solve it entirely!”

How inflation works

The inflation technique works like this: The researchers take the primary causal variables in the original causal diagram (the “root” variables) and consider a new causal diagram in which they are duplicated. Each original, single variable is thus transformed into several identical but independently distributed copies. It is possible to then reintroduce the dependent (non-root) variables on top of this inflated base, creating a so-called inflation graph in which every node corresponds to a copy of an original variable with a causal ancestry of precisely the same structure as in the original graph.
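The construction described above can be sketched in Python for the simplest case, where every dependent variable has only root variables as parents. This is an illustration under stated assumptions rather than the authors' own code: the function name `inflate`, the node labels, and the naming scheme for copies are all hypothetical, and the example graph is a triangle-shaped structure in which three observed variables each share one hidden root with each of the other two.

```python
from itertools import product

# Assumed labelling of a triangle-shaped structure: each observed node
# lists its two hidden root parents.
TRIANGLE = {"A": ("X", "Y"), "B": ("Y", "Z"), "C": ("Z", "X")}

def inflate(parents, copies):
    """Build an inflation graph for a two-layer causal structure.

    parents: maps each dependent node to a tuple of root nodes.
    copies:  number of independent copies made of every root.
    Returns a dict mapping each inflated node to its inflated parents.
    """
    levels = range(1, copies + 1)
    roots = sorted({r for ps in parents.values() for r in ps})

    # Step 1: duplicate every root variable.
    graph = {f"{r}_{i}": () for r in roots for i in levels}

    # Step 2: reintroduce the dependent variables, one copy for every
    # combination of parent copies, so each copy has the same kind of
    # ancestry as the original node.
    for node, ps in parents.items():
        for idx in product(levels, repeat=len(ps)):
            name = node + "".join(f"_{i}" for i in idx)
            graph[name] = tuple(f"{p}_{i}" for p, i in zip(ps, idx))
    return graph

web = inflate(TRIANGLE, 2)
observed_copies = [n for n, ps in web.items() if ps]

print(len(web), "nodes in total,", len(observed_copies), "of them dependent")
print("parents of A_1_2:", web["A_1_2"])
```

With two copies of each root, every dependent node with two parents gets four copies. Raising `copies` makes the graph grow quickly, which gives a feel for why higher inflation levels are more computationally demanding. The sketch only builds the graph; deriving inequalities from it is a separate and much heavier step.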

The power of the inflation technique to discover inequalities grows as the number of “root” copies increases. This technique is capable, in principle, of discovering every inequality implied by a given causal structure.

With modest computational resources (say, a laptop), Wolfe and Navascués’ hierarchy can be used to analyze various causal scenarios by considering inflation graphs with two or three copies of each root node. As the number of copies rises, the procedure becomes more computationally demanding. “We can talk about inflation level three, where we consider three copies of every root node, or inflation level four, where we consider four copies of every root node,” says Wolfe. “You get these larger and larger inflation graphs and more and more symmetry constraints which must be enforced to obtain the conclusions.”

Left: The triangle scenario, with observed nodes in yellow and latent nodes in blue.

Centre: The web inflation of the triangle scenario, where each latent node is copied once, leading to quadrupled observed nodes.

Right: The spiral inflation of the triangle scenario.

Wolfe hopes the technique will soon become part of the standard causality toolkit, allowing researchers with big classical datasets to zoom in and probe deeply into small subsections of their causal diagrams. For quantum researchers, the problem of certifying the “quantumness” of correlations is of great interest, and the technique is likely to have applications in quantum information science.

“Take the example of a communication network with a particular causal structure. If you haven’t figured out what are the constraints on correlations that are achievable classically in such a network, you might miss the fact that quantum theory offers an advantage for certain communication tasks,” says Spekkens. (To understand the extent to which any inequality can be violated within quantum theory, Wolfe and others later devised a special, quantum-specific version of the inflation technique, now on the arXiv.)

A bridge is built

Spekkens notes that ideas have been flowing in both directions between the fields of causal inference and quantum physics: “The set of concepts and the mathematical framework developed by the causal inference community provide a really fruitful new perspective on the conceptual problems of quantum theory. They open up a whole new set of questions for quantum theorists to ponder.”

Through the inflation work, physicists are returning the favour. Indeed, its authors expect that its most significant applications will be to problems outside of fundamental physics. “Once you realize that Bell’s theorem is an example of a result in causal inference, you realize that researchers in quantum foundations actually have 50 years of pertinent expertise,” Spekkens says.

Computer scientist Shpitser also sees the benefits of breaking down the disciplinary divide: “Inflation technique aside, my colleagues and I are very excited to see an increase in dialogue between physicists and causal inference researchers. Speaking for myself, I have already learned very much from their point of view on causality.”

Gisin echoes the sentiment: “Having a new community looking at the problem in new ways will profit the society at large. New tools and new students trained somewhat differently than today’s experts in ‘big data analysis’ may lead to breakthroughs in many fields.”

NOTE: This story has been updated from its original version to match the printed article from Inside the Perimeter Magazine.
