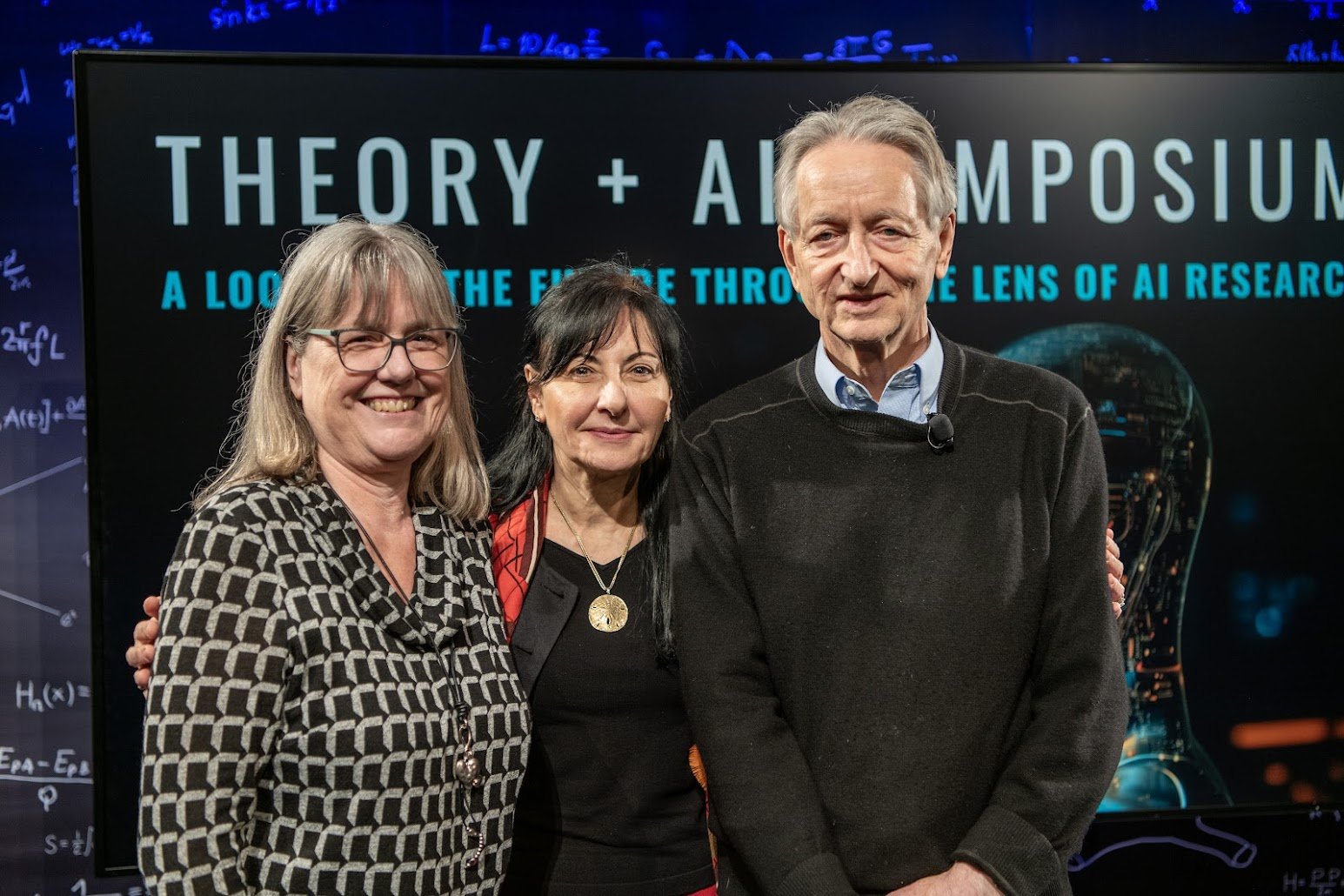

“It’s good to have a problem you want to solve, rather than a discipline you want to do,” Canadian Nobel Prize winner Geoffrey Hinton told graduate students and postdoctoral researchers at Perimeter Institute on April 7, 2025.

Hinton, professor emeritus at the University of Toronto and chief scientific advisor at the Vector Institute for Artificial Intelligence, spoke at the Theory + AI Symposium, one of the events marking Perimeter’s 25th anniversary.

Physicists, engineers, AI researchers, and entrepreneurs at the symposium offered their perspectives on what theoretical physics will look like in the age of artificial intelligence (AI), the engineering challenges ahead, and the exciting tools and collaborations emerging in today’s AI landscape.

Hinton, often described as one of the “godfathers of AI,” won the Nobel Prize in Physics in 2024 for his pioneering contributions to machine learning and artificial intelligence.

What’s remarkable is that he is not a physicist. He is a computer scientist who used statistical physics to take early steps in developing artificial neural networks. And it all began with his interest in a problem that was largely unrelated to either physics or computer science: How does the brain work?

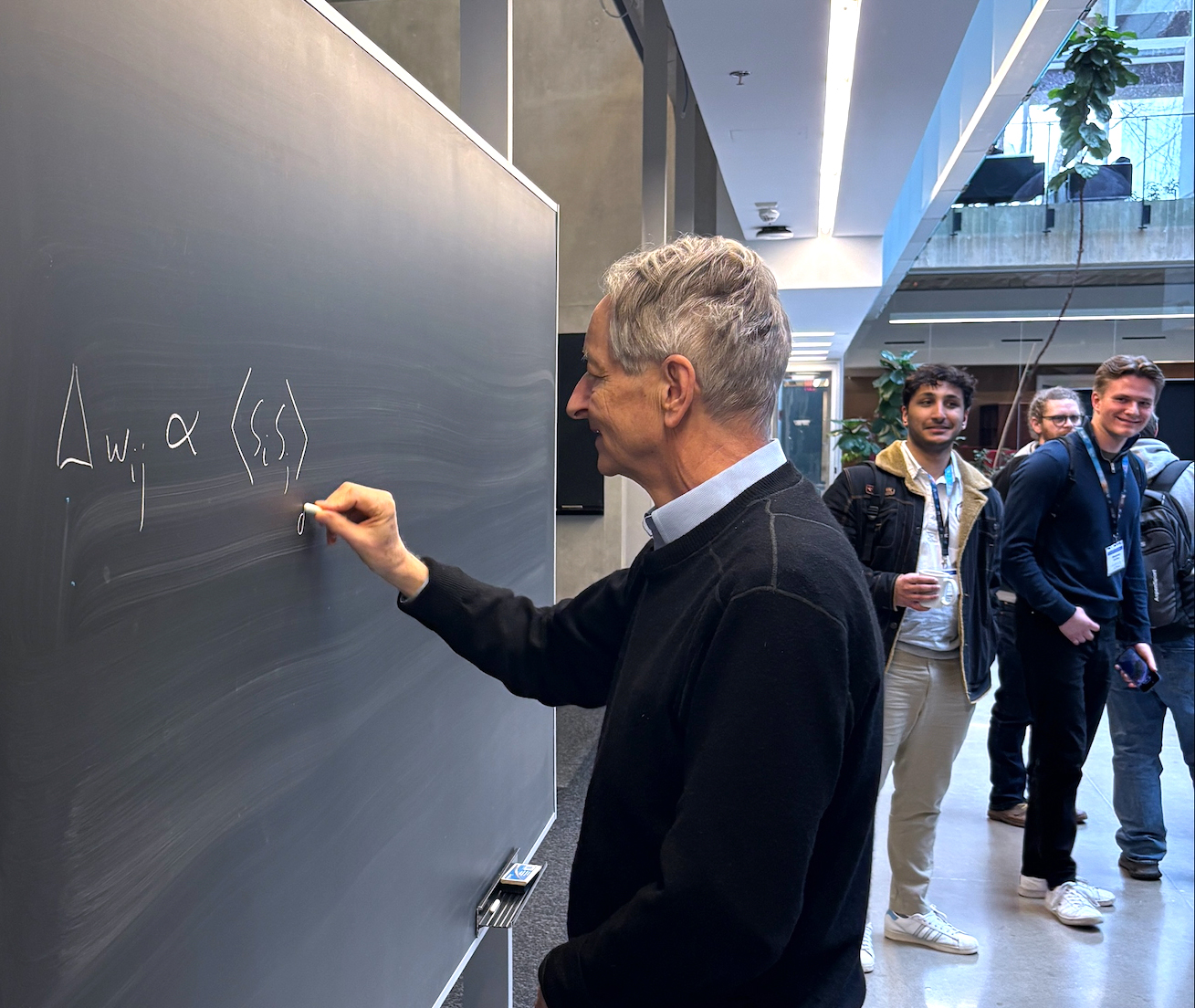

“I’ve always been interested in how the brain works, but I was disappointed with the answers I got from studying in various fields of science,” Hinton said during a colloquium on Boltzmann Machines.

After changing his degree a few times, he eventually got his BA in experimental psychology from the University of Cambridge. He even took physiology there, learning how the central nervous system works and how the brain uses sodium and potassium channels to create and maintain electrical signals across cell membranes.

But he was still unsatisfied. “I still didn’t know how the brain actually works.”

Nevertheless, his quest for answers led him to machine learning. “I picked up some statistical physics in my attempts to understand how the brain works,” he said. Hinton got his PhD in artificial intelligence from the University of Edinburgh in 1978. That set him on the path that eventually led to the Nobel Prize, though not by design. He was a scientist in search of answers, and the great honour that followed came as a surprise even to Hinton himself.

In the 1980s, Hinton, with his students and co-researchers, developed an extension of the Hopfield network, a type of artificial neural network invented by co-laureate John Hopfield, professor emeritus at Princeton University. A Hopfield network can store patterns and recall them even from partial or noisy inputs.
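To make the idea of recalling a pattern from a noisy input more concrete, here is a minimal Python sketch of a classic Hopfield network. It assumes the textbook setup: neurons that take the values +1 or -1, weights set by a Hebbian rule, and neurons updated one at a time. The stored patterns and function names are hypothetical illustrations, not code from Hopfield or Hinton.

```python
"""Toy Hopfield network: store +1/-1 patterns, then recall one from a corrupted copy."""


def train(patterns):
    """Hebbian rule: w[i][j] = sum over patterns of p[i] * p[j], with no self-connections."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def recall(w, state, sweeps=5):
    """Asynchronous updates: each neuron in turn takes the sign of its weighted input."""
    s = list(state)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s


# Two hypothetical 8-neuron patterns to memorize.
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train(stored)
noisy = [1, 1, -1, 1, -1, -1, -1, -1]  # first pattern with one neuron flipped
print(recall(weights, noisy))  # → [1, 1, 1, 1, -1, -1, -1, -1]
```

Starting from the first stored pattern with one neuron flipped, the network settles back onto the original pattern.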

Subsequent work by Hinton and his colleagues led to the Boltzmann Machine, an early and influential approach to machine learning. (It is named after Austrian physicist Ludwig Boltzmann because it is based on the principles of statistical mechanics, specifically the Boltzmann distribution, which gives the probability of a system being in a particular state as a function of that state’s energy: the lower the energy, the more likely the state.)
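The Boltzmann distribution assigns each state s a probability proportional to exp(-E(s)/T), where E is the state’s energy and T is a temperature. The sketch below assumes the standard energy function for a Boltzmann machine with binary (0 or 1) neurons and computes the distribution exactly by listing every state. The three-neuron machine and its weights and biases are made up for illustration.

```python
import itertools
import math


def energy(state, weights, biases):
    """Boltzmann machine energy: E(s) = -sum_{i<j} w_ij s_i s_j - sum_i b_i s_i."""
    n = len(state)
    e = -sum(biases[i] * state[i] for i in range(n))
    for i in range(n):
        for j in range(i + 1, n):
            e -= weights[i][j] * state[i] * state[j]
    return e


def boltzmann_distribution(weights, biases, temperature=1.0):
    """Probability of every binary state: P(s) = exp(-E(s)/T) / Z."""
    n = len(biases)
    states = list(itertools.product([0, 1], repeat=n))
    unnormalized = [math.exp(-energy(s, weights, biases) / temperature) for s in states]
    z = sum(unnormalized)  # partition function: sums over all 2**n states
    return {s: u / z for s, u in zip(states, unnormalized)}


# Hypothetical 3-neuron machine: neurons 0 and 1 "prefer" to be on together,
# while neurons 0 and 2 discourage each other.
w = [[0.0, 2.0, -1.0],
     [2.0, 0.0, 0.0],
     [-1.0, 0.0, 0.0]]
b = [0.0, 0.0, 0.5]
probs = boltzmann_distribution(w, b)
for state, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(state, round(p, 3))
```

The lowest-energy state, (1, 1, 0), comes out as the most probable. Listing every state only works for tiny networks, because the number of states doubles with each added neuron, which hints at why exact calculations with Boltzmann Machines become expensive.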

The original Boltzmann Machine demanded a lot of computing power, which made it impractical to scale up. However, Hinton and his colleagues developed a slimmed-down version called the Restricted Boltzmann Machine, which drops the connections between neurons in the same layer. That restriction made it much easier to train, and layers of artificial neurons could be stacked on top of one another.

That work led to other machine learning developments in the 1990s, such as the wake-sleep algorithm, also developed by Hinton and his colleagues. The algorithm takes its name from its two learning phases, a “wake” phase and a “sleep” phase, which alternate.

All of this was inspired by the workings of the brain, the subject that really fascinated Hinton from the start.

In simple terms, the brain’s nerve cells, called neurons, communicate with one another through chemical and electrical signals passed across junctions called synapses, and those connections are constantly strengthening and weakening. This is happening in your brain even as you read this sentence.

Artificial neural networks are, of course, much simpler than the brain’s networks of billions of biological neurons, each of which fires or stays silent depending on the strengths of its connections. But the two are similar in one key respect. In an artificial neural network, each connection carries a numerical weight that sets its strength, and in the simplest models an artificial neuron fires only when the weighted sum of its inputs crosses a threshold, mimicking the way a biological neuron fires once its input exceeds a certain level.

Adjusting those weights in response to examples is how AIs “learn” from data rather than just following preprogrammed instructions.
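As a rough illustration of weights, thresholds, and learning from data, the sketch below trains a single threshold neuron with the classic perceptron learning rule. That rule is a textbook technique chosen here for simplicity, not one the article attributes to Hinton, and the task (logical AND) and function names are hypothetical.

```python
def fires(weights, bias, inputs):
    """A threshold unit: it 'fires' (returns 1) only if the weighted input sum exceeds 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(examples, n_inputs, epochs=20, lr=1.0):
    """Perceptron rule: after each mistake, nudge the weights to reduce the error."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - fires(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Learn logical AND from four labelled examples instead of hard-coding it.
and_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(and_examples, n_inputs=2)
print([fires(w, b, x) for x, _ in and_examples])  # → [0, 0, 0, 1]
```

The neuron is never told the rule for AND; it adjusts its weights after each wrong answer until its outputs match the four examples.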

The work that Hinton and his AI colleagues did over the decades has also changed our perspectives on how language works.

There has long been a debate about whether the ability to acquire language is “built in,” as linguist Noam Chomsky has proposed in his theory of “universal grammar,” or whether it is learned through exposure to linguistic experience or data. The debate continues, and Chomsky has criticized AI language models as glorified “stochastic parrots.”

Nevertheless, AI chatbots like ChatGPT have deepened our understanding of how we might arrive at “meaning.” What matters is not the words in isolation but their context: the relationships between words.

Hinton provided an analogy.

Let’s say you are building a Lego model of a car. By putting together differently shaped Lego pieces, one into the other, you can create the shape you want. Now, imagine words as higher-dimensional Lego blocks with many connecting hands. Depending on the way the hands of the Lego blocks connect with one another, the shapes of the blocks change.

It is as if 1,000-dimensional Lego blocks were shaking hands with other high-dimensional Lego blocks, each block taking a different shape depending on how it connects.
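One loose way to see these shape-shifting blocks in code is a simplified form of self-attention, the mechanism used in transformer language models. This is a toy under heavy assumptions, not Hinton’s own formulation: the 3-dimensional word vectors are made up, and it omits the learned projections and many stacked layers of real models. Each word’s vector is replaced by a blend of all the word vectors around it, weighted by how strongly they match.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def contextualize(vectors):
    """One round of simplified self-attention: each word's vector becomes a
    similarity-weighted blend of every word vector in the sentence."""
    out = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) for k in vectors]  # dot products
        weights = softmax(scores)
        dim = len(q)
        out.append([sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)])
    return out


# Made-up 3-dimensional "embeddings" (real models use hundreds or thousands of dimensions).
emb = {
    "she": [0.1, 0.0, 0.9],
    "scrummed": [0.5, 0.5, 0.0],
    "him": [0.0, 0.1, 0.9],
    "frying_pan": [1.0, 0.0, 0.0],
    "rugby": [0.0, 1.0, 0.0],
}

context_a = ["she", "scrummed", "him", "frying_pan"]
context_b = ["she", "scrummed", "him", "rugby"]
vec_a = contextualize([emb[t] for t in context_a])[1]  # "scrummed" in context A
vec_b = contextualize([emb[t] for t in context_b])[1]  # "scrummed" in context B
print([round(x, 2) for x in vec_a])
print([round(x, 2) for x in vec_b])
```

The vector for “scrummed” ends up different in the two toy contexts, shifting toward the “frying_pan” direction in one and the “rugby” direction in the other, even though the word itself is the same.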

Hinton gave an example. Take the word “scrummed.” A scrum can mean different things; for example, a disorderly struggle. But in the sentence “She scrummed him with the frying pan,” the meaning changes. “Most people have a pretty good idea of what it means,” Hinton said.

“That is what it is to understand the meaning of a sentence,” Hinton said. The same principle lets AI systems put together shapes and images, and lets a large language model predict the next word.

The symposium also explored how AI tools will change the way discoveries are made in physics. Researchers and entrepreneurs from organizations building AI, such as Anthropic, Google DeepMind, and Mila, also attended to share ideas and information about the latest developments.

Stephen Wolfram, founder of Wolfram Research and creator of Mathematica, Wolfram|Alpha, and the Wolfram Language, gave a virtual talk, during which he was asked whether young people earning physics degrees now should pursue academia or entrepreneurship. Wolfram said that the entrepreneurial path was the right choice for him, but it may not be for everyone.

Like Hinton, Wolfram has spent his life pursuing his own interests and his dream.

“I am at least aspiring to, and I think achieving, living the AI dream, so to speak. We humans come up with things that we want to do, and then, somehow, we get AIs to automate getting those things done,” Wolfram said. “Well, I have been trying to build a stack of technology to make that possible for the past 45 years,” he added.

He said he primarily did this “because I wanted to have it for myself, to do all kinds of science.”

He continues to do so, as do many AI researchers today.

Perimeter Institute makes all of its major talks and lectures freely available to anyone around the world on its Perimeter Institute Recorded Seminar Archive. Check out the talks for this symposium at https://pirsa.org/c25020

About PI

Perimeter Institute is the world’s largest research hub devoted to theoretical physics. The independent Institute was founded in 1999 to foster breakthroughs in the fundamental understanding of our universe, from the smallest particles to the entire cosmos. Research at Perimeter is motivated by the understanding that fundamental science advances human knowledge and catalyzes innovation, and that today’s theoretical physics is tomorrow’s technology. Located in the Region of Waterloo, the not-for-profit Institute is a unique public-private endeavour, including the Governments of Ontario and Canada, that enables cutting-edge research, trains the next generation of scientific pioneers, and shares the power of physics through award-winning educational outreach and public engagement.
